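In Mininet experiments, packets go through the kernel protocol stack. The advantage is that you get the behavior and performance of a real network stack; the drawback is that testing a new idea means modifying the kernel stack. For getting at kernel-internal data such as TCP's congestion window, see [4-5]; I have not checked whether those approaches also work for MPTCP.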

1. Experiment steps:

1. Install the MPTCP protocol stack; see [1].

2. Install Mininet.

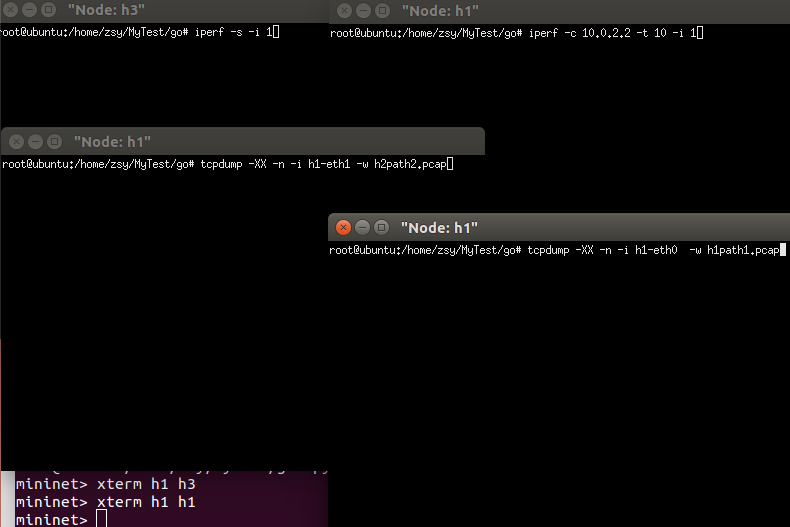

3. Run the topology script multihomed-4h.py [2] and start iperf to measure the bandwidth between nodes.

4. For iperf, open two consoles with `xterm h1 h3`. Capturing packets needs one more console on h1.

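5. In that extra h1 console, start the capture:

`tcpdump -XX -n -i h1-eth0 -w /yourpath/mptcp.pcap`

h1-eth0 only carries the subflow that goes through h2; to also see the subflow through h4, capture on h1-eth1 as well.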
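Before step 3 it is worth confirming that the running kernel actually has MPTCP. The sketch below is a minimal check, assuming the sysctl names `net.mptcp.mptcp_enabled` (out-of-tree multipath-tcp.org kernel) and `net.mptcp.enabled` (upstream Linux 5.6 and later); `mptcp_status` is a hypothetical helper, and it only reports the raw value, since what each value means depends on the kernel version.

```python
from pathlib import Path

# Sysctl files (relative to /proc/sys) that indicate MPTCP support.
# Assumption: the out-of-tree kernel uses mptcp_enabled, upstream uses enabled.
CANDIDATES = {
    "out-of-tree (multipath-tcp.org)": "net/mptcp/mptcp_enabled",
    "upstream (Linux >= 5.6)": "net/mptcp/enabled",
}


def mptcp_status(sysctl_root="/proc/sys"):
    """Return (flavour, raw value) for the first MPTCP sysctl found, else None."""
    root = Path(sysctl_root)
    for flavour, rel in CANDIDATES.items():
        path = root / rel
        if path.is_file():
            return flavour, path.read_text().strip()
    return None


if __name__ == "__main__":
    status = mptcp_status()
    if status is None:
        print("no MPTCP sysctl found: this kernel probably lacks MPTCP")
    else:
        flavour, value = status
        print(f"{flavour} MPTCP, value={value}")
```

Run it on the host before starting Mininet; the hosts created by the script share the same kernel.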

multihomed-4h.py

#!/usr/bin/python

from mininet.topo import Topo

from mininet.net import Mininet

from mininet.cli import CLI

from mininet.link import TCLink

import time

##https://serverfault.com/questions/417885/configure-gateway-for-two-nics-through-static-routeing

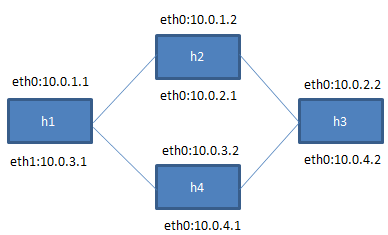

# ____h2____

# / \

# h1 h3

# \___h4_____/

#

max_queue_size = 100

net = Mininet( cleanup=True )

h1 = net.addHost('h1',ip='10.0.1.1')

h2 = net.addHost('h2',ip='10.0.1.2')

h3 = net.addHost('h3',ip='10.0.2.2')

h4 = net.addHost('h4',ip='10.0.3.2')

c0 = net.addController( 'c0' )

net.addLink(h1,h2,intfName1='h1-eth0',intfName2='h2-eth0', cls=TCLink , bw=2, delay='10ms', max_queue_size=max_queue_size)

net.addLink(h2,h3,intfName1='h2-eth1',intfName2='h3-eth0', cls=TCLink , bw=2, delay='10ms', max_queue_size=max_queue_size)

net.addLink(h1,h4,intfName1='h1-eth1',intfName2='h4-eth0', cls=TCLink , bw=2, delay='30ms', max_queue_size=max_queue_size)

net.addLink(h4,h3,intfName1='h4-eth1',intfName2='h3-eth1', cls=TCLink , bw=2, delay='30ms', max_queue_size=max_queue_size)

net.build()

h1.setIP('10.0.1.1', intf='h1-eth0')

h1.cmd("ifconfig h1-eth0 10.0.1.1 netmask 255.255.255.0")

h1.setIP('10.0.3.1', intf='h1-eth1')

h1.cmd("ifconfig h1-eth1 10.0.3.1 netmask 255.255.255.0")

h1.cmd("ip route flush all proto static scope global")

h1.cmd("ip route add 10.0.1.1/24 dev h1-eth0 table 5000")

h1.cmd("ip route add default via 10.0.1.2 dev h1-eth0 table 5000")

h1.cmd("ip route add 10.0.3.1/24 dev h1-eth1 table 5001")

h1.cmd("ip route add default via 10.0.3.2 dev h1-eth1 table 5001")

h1.cmd("ip rule add from 10.0.1.1 table 5000")

h1.cmd("ip rule add from 10.0.3.1 table 5001")

h1.cmd("route add default gw 10.0.1.2 dev h1-eth0")

h2.setIP('10.0.1.2', intf='h2-eth0')

h2.setIP('10.0.2.1', intf='h2-eth1')

h2.cmd("ifconfig h2-eth0 10.0.1.2/24")

h2.cmd("ifconfig h2-eth1 10.0.2.1/24")

h2.cmd("ip route add 10.0.2.0/24 via 10.0.2.2")

h2.cmd("ip route add 10.0.1.0/24 via 10.0.1.1")

h2.cmd("echo 1 > /proc/sys/net/ipv4/ip_forward")

h4.setIP('10.0.3.2', intf='h4-eth0')

h4.setIP('10.0.4.1', intf='h4-eth1')

h4.cmd("ifconfig h4-eth0 10.0.3.2/24")

h4.cmd("ifconfig h4-eth1 10.0.4.1/24")

h4.cmd("ip route add 10.0.4.0 dev h4-eth1") #via 10.0.4.2

h4.cmd("ip route add 10.0.3.0 via 10.0.3.1")

h4.cmd("echo 1 > /proc/sys/net/ipv4/ip_forward")

h3.setIP('10.0.2.2', intf='h3-eth0')

h3.cmd("ifconfig h3-eth0 10.0.2.2 netmask 255.255.255.0")

h3.setIP('10.0.4.2', intf='h3-eth1')

h3.cmd("ifconfig h3-eth1 10.0.4.2 netmask 255.255.255.0")

h3.cmd("ip route flush all proto static scope global")

h3.cmd("ip route add 10.0.2.2/24 dev h3-eth0 table 5000")

h3.cmd("ip route add default via 10.0.2.1 dev h3-eth0 table 5000")

h3.cmd("ip route add 10.0.4.2/24 dev h3-eth1 table 5001")

h3.cmd("ip route add default via 10.0.4.1 dev h3-eth1 table 5001")

h3.cmd("ip rule add from 10.0.2.2 table 5000")

h3.cmd("ip rule add from 10.0.4.2 table 5001")

net.start()

time.sleep(1)

CLI(net)

net.stop()
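The `ip rule` / table 5000 / table 5001 lines give h1 source-based routing: the source address of a packet selects the routing table, and that table's default route selects the outgoing interface. The sketch below is a simplified Python model of that decision on h1, not the kernel's algorithm; it assumes on-link prefixes 10.0.1.0/24 and 10.0.3.0/24 and ignores the local table, scopes and metrics. `TABLES`, `RULES` and `lookup` are hypothetical names.

```python
import ipaddress

# Simplified model of h1's routing state after multihomed-4h.py has run.
# Each route is (prefix, gateway or None for on-link, device).
TABLES = {
    "main": [("10.0.1.0/24", None, "h1-eth0"),
             ("10.0.3.0/24", None, "h1-eth1"),
             ("0.0.0.0/0", "10.0.1.2", "h1-eth0")],
    "5000": [("10.0.1.0/24", None, "h1-eth0"),
             ("0.0.0.0/0", "10.0.1.2", "h1-eth0")],
    "5001": [("10.0.3.0/24", None, "h1-eth1"),
             ("0.0.0.0/0", "10.0.3.2", "h1-eth1")],
}
# "ip rule" entries in evaluation order: (source address, table).
# A source of None stands for the catch-all rule that selects "main".
RULES = [("10.0.1.1", "5000"), ("10.0.3.1", "5001"), (None, "main")]


def lookup(src, dst):
    """Return (gateway, device) for a packet from src to dst, or None."""
    dst_ip = ipaddress.ip_address(dst)
    for rule_src, table in RULES:
        if rule_src is not None and rule_src != src:
            continue  # this rule does not match the source address
        candidates = [(ipaddress.ip_network(prefix), gw, dev)
                      for prefix, gw, dev in TABLES[table]
                      if dst_ip in ipaddress.ip_network(prefix)]
        if candidates:
            # longest-prefix match within the selected table
            _, gw, dev = max(candidates, key=lambda c: c[0].prefixlen)
            return gw, dev
    return None


if __name__ == "__main__":
    # The four (local, remote) address pairs a full-mesh path manager
    # could try between h1 and h3.
    for src in ("10.0.1.1", "10.0.3.1"):
        for dst in ("10.0.2.2", "10.0.4.2"):
            print(f"{src} -> {dst}: {lookup(src, dst)}")
```

In this model every packet from 10.0.1.1 goes to h2 and every packet from 10.0.3.1 goes to h4, whatever the destination. Because h2 and h4 only know their two directly connected subnets, the pairs 10.0.1.1 to 10.0.4.2 and 10.0.3.1 to 10.0.2.2 should be dropped at the router, leaving two usable subflows.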

2. Tips for running the script

Start the script as root, then open the xterms from the Mininet prompt:

sudo python multihomed-4h.py

mininet> xterm h1 h3

mininet> xterm h1
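Before reading the iperf numbers, it helps to know what the topology allows. The sketch below computes each path's bottleneck rate, base round-trip time and bandwidth-delay product from the TCLink parameters in multihomed-4h.py. It assumes 1500-byte packets and that the `delay` value applies in each direction of a link (TCLink configures both interfaces with the same parameters), and it ignores transmission and processing time. `Link`, `path_stats` and `queue_delay_ms` are illustrative names.

```python
from dataclasses import dataclass

PACKET_BYTES = 1500  # assumption: full-size packets on a 1500-byte MTU


@dataclass
class Link:
    bw_mbit: float   # TCLink bw= value, Mbit/s
    delay_ms: float  # TCLink delay= value, applied in each direction


def path_stats(links):
    """Return (bottleneck Mbit/s, base RTT ms, BDP in packets) for a path."""
    rate = min(link.bw_mbit for link in links)
    rtt_ms = 2 * sum(link.delay_ms for link in links)  # no queuing included
    bdp_bits = rate * 1e6 * rtt_ms / 1000
    return rate, rtt_ms, bdp_bits / 8 / PACKET_BYTES


def queue_delay_ms(rate_mbit, packets):
    """Extra delay (ms) added by a full queue of `packets` drained at `rate_mbit`."""
    bits = packets * PACKET_BYTES * 8
    return bits * 1000 / (rate_mbit * 1e6)


# Link parameters taken from multihomed-4h.py
PATHS = {
    "h1-h2-h3": [Link(2, 10), Link(2, 10)],
    "h1-h4-h3": [Link(2, 30), Link(2, 30)],
}

if __name__ == "__main__":
    for name, links in PATHS.items():
        rate, rtt, bdp = path_stats(links)
        print(f"{name}: {rate} Mbit/s, RTT {rtt} ms, BDP {bdp:.1f} packets")
    total = sum(path_stats(links)[0] for links in PATHS.values())
    print(f"ideal MPTCP aggregate: {total} Mbit/s")
    print(f"full 100-packet queue: +{queue_delay_ms(2, 100):.0f} ms")
```

Under these assumptions the upper path has a 40 ms base RTT and the lower one 120 ms, each capped at 2 Mbit/s, so 4 Mbit/s is an upper bound for an MPTCP iperf run; coupled congestion control and the RTT mismatch may keep the measured value below it. The 100-packet queue is much larger than either BDP (about 7 and 20 packets), and if it fills at 2 Mbit/s it adds roughly 600 ms of delay.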

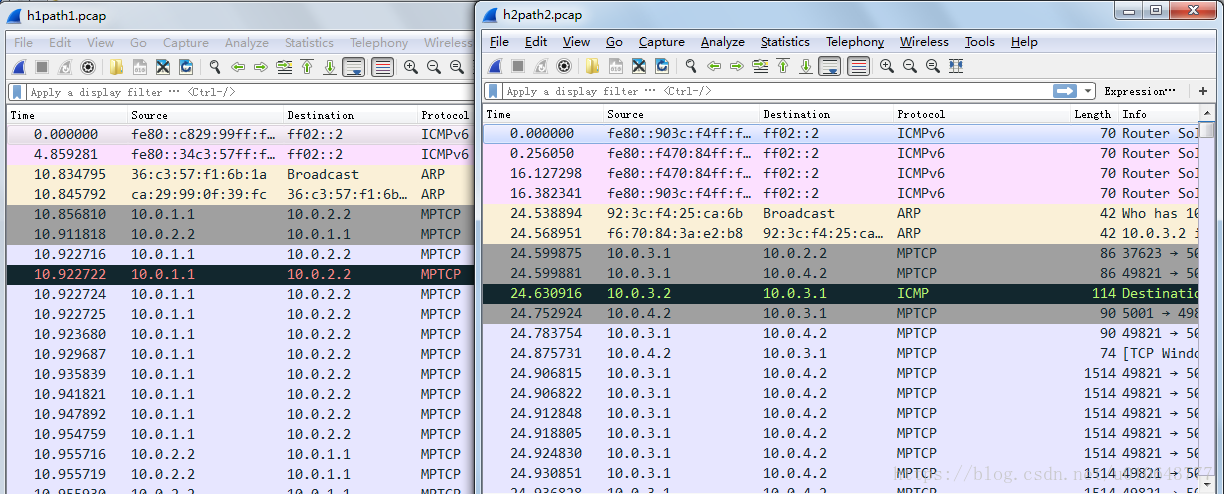

3.And the packets captured results

以上是在wireshark中打开的mptcp.pcap的截图,可以看到,网络层使用的协议栈是mptcp,这对于上层应用是透明的。
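To check the same thing without Wireshark, for example from a script, the sketch below counts MPTCP options (TCP option kind 30) per address pair and subtype in a capture such as mptcp.pcap. It is a minimal parser that assumes a classic pcap file with Ethernet link type (what `tcpdump -i h1-eth0 -w` writes), IPv4 and no VLAN tags; pcapng and Linux cooked captures are rejected. The subtype names follow RFC 6824; `tcp_options` and `count_mptcp` are illustrative names.

```python
import struct
import sys
from collections import Counter

MPTCP_KIND = 30  # TCP option kind assigned to MPTCP
# Option subtypes from RFC 6824 (upper 4 bits of the third option byte)
SUBTYPES = {0: "MP_CAPABLE", 1: "MP_JOIN", 2: "DSS", 3: "ADD_ADDR",
            4: "REMOVE_ADDR", 5: "MP_PRIO", 6: "MP_FAIL", 7: "MP_FASTCLOSE"}
LINKTYPE_ETHERNET = 1


def tcp_options(opts):
    """Yield (kind, payload) for each option in a raw TCP options field."""
    i = 0
    while i < len(opts):
        kind = opts[i]
        if kind == 0:              # End of Option List
            break
        if kind == 1:              # NOP padding, single byte
            i += 1
            continue
        if i + 1 >= len(opts) or opts[i + 1] < 2:
            break                  # truncated or malformed option
        length = opts[i + 1]
        yield kind, opts[i + 2:i + length]
        i += length


def count_mptcp(pcap_bytes):
    """Count MPTCP options per (src, dst, subtype) in a classic pcap file."""
    magic = pcap_bytes[:4]
    if magic in (b"\xd4\xc3\xb2\xa1", b"\x4d\x3c\xb2\xa1"):
        endian = "<"               # little-endian (usec or nsec timestamps)
    elif magic in (b"\xa1\xb2\xc3\xd4", b"\xa1\xb2\x3c\x4d"):
        endian = ">"
    else:
        raise ValueError("not a classic pcap file (pcapng is not handled)")
    (linktype,) = struct.unpack(endian + "I", pcap_bytes[20:24])
    if linktype != LINKTYPE_ETHERNET:
        raise ValueError(f"unsupported link type {linktype}")

    counts = Counter()
    off = 24                       # skip the 24-byte global header
    while off + 16 <= len(pcap_bytes):
        (incl_len,) = struct.unpack(endian + "I", pcap_bytes[off + 8:off + 12])
        frame = pcap_bytes[off + 16:off + 16 + incl_len]
        off += 16 + incl_len
        if len(frame) < 34 or frame[12:14] != b"\x08\x00":
            continue               # too short, or not IPv4 over Ethernet
        ip = frame[14:]
        ihl = (ip[0] & 0x0F) * 4
        if ip[9] != 6 or len(ip) < ihl + 20:
            continue               # not TCP, or TCP header truncated
        src = ".".join(str(b) for b in ip[12:16])
        dst = ".".join(str(b) for b in ip[16:20])
        tcp = ip[ihl:]
        data_offset = (tcp[12] >> 4) * 4
        for kind, payload in tcp_options(tcp[20:data_offset]):
            if kind == MPTCP_KIND and payload:
                name = SUBTYPES.get(payload[0] >> 4, "unknown")
                counts[(src, dst, name)] += 1
    return counts


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as f:
        result = count_mptcp(f.read())
    for (src, dst, name), n in sorted(result.items()):
        print(f"{src} -> {dst}  {name}: {n}")
```

Save it to a file and pass the capture path as the only argument. MP_CAPABLE marks the initial subflow and MP_JOIN any additional one, so a capture taken on h1-eth0 alone is expected to show only the subflow through h2.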